How to Make the Universe Think for Us

Posted on Monday, 28 July, 2025

By neusler

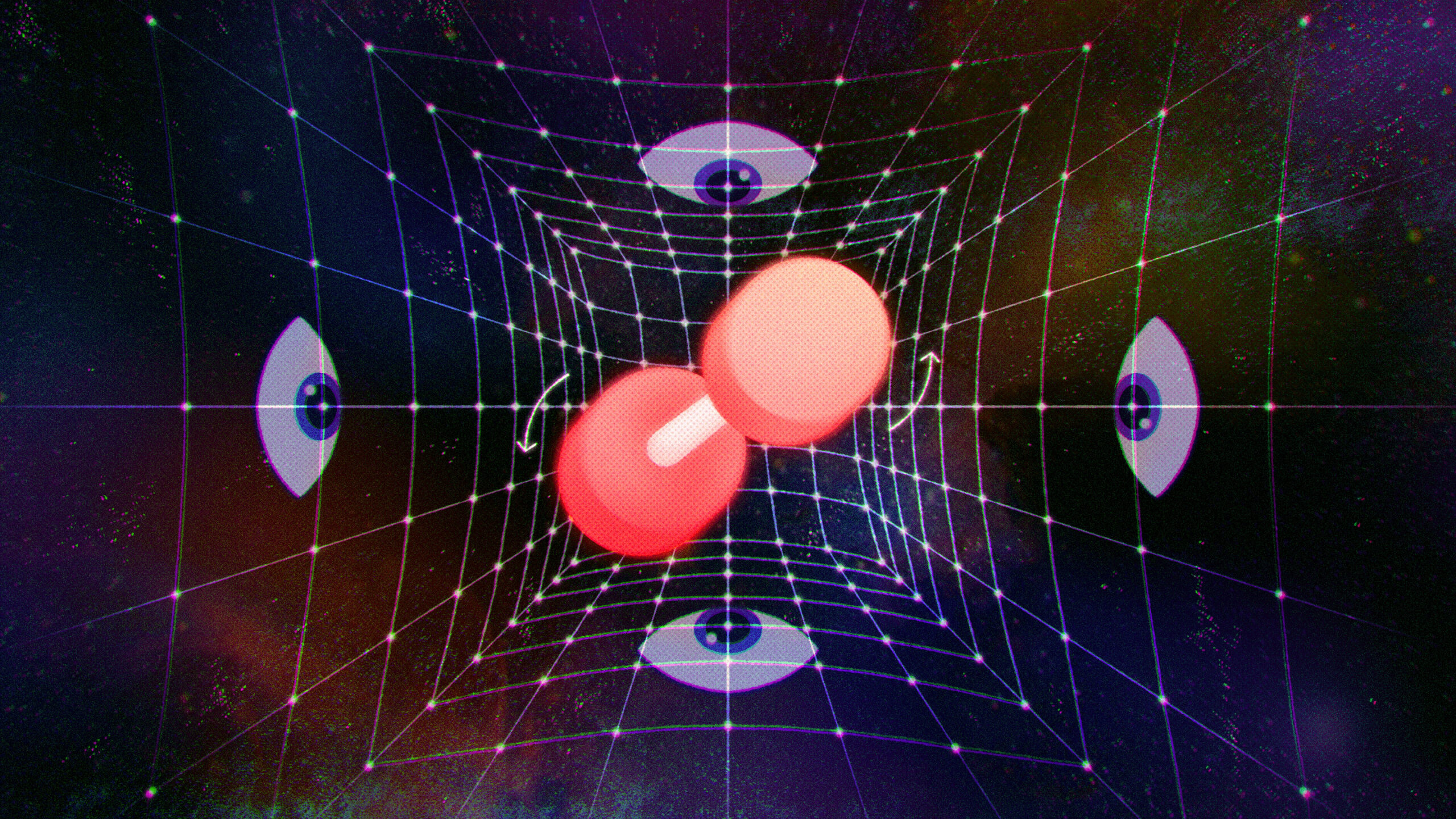

Physicists are building neural networks out of vibrations, voltages and lasers, arguing that the future of computing lies in exploiting the universe’s complex physical behaviors.

Inside a soundproofed crate sits one of the world’s worst neural networks. After being presented with an image of the number 6, it pauses for a moment before identifying the digit: zero. Peter McMahon, the physicist-engineer at Cornell University who led the development of the network, defends it with a sheepish smile, pointing out that the handwritten number looks sloppy. Logan Wright, a postdoc visiting McMahon’s lab from NTT Research, assures me that the device usually gets the answer right, but acknowledges that mistakes are common. “It’s just this bad,” he said.

Despite the underwhelming performance, this neural network is a groundbreaker. The researchers tip the crate over, revealing not a computer chip but a microphone angled toward a titanium plate that’s bolted to a speaker. Other neural networks operate in the digital world of 0s and 1s, but this device runs on sound. When Wright cues up a new image of a digit, its pixels get converted into audio and a faint chattering fills the lab as the speaker shakes the plate. Metallic reverberations do the “reading” rather than software running on silicon. That the device often succeeds beggars belief, even to its designers.

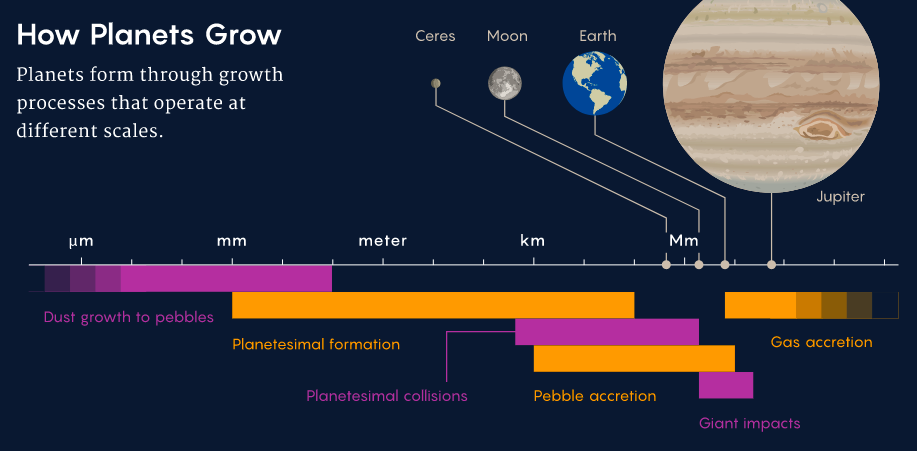

When it comes to conventional machine learning, computer scientists have discovered that bigger is better. Stuffing a neural network with more artificial neurons — nodes that store numerical values — improves its ability to tell a dachshund from a Dalmatian, or to succeed at myriad other pattern recognition tasks. Truly tremendous neural networks can pull off unnervingly human undertakings like composing essays and creating illustrations. With more computational muscle, even grander feats may become possible. This potential has motivated a multitude of efforts to develop more powerful and efficient methods of computation.

A neural network arranges its neurons in layers, and each neuron is linked to the neurons of the next layer by adjustable numbers called synaptic weights. When you want the network to read a digit (4, say), you make the first layer of neurons represent a raw image of the 4, perhaps storing the shade of each pixel as a value in a corresponding neuron. Then the network “thinks,” moving layer by layer, multiplying the neuron values by the synaptic weights to populate the next layer of neurons. The neuron with the highest value in the final layer indicates the network’s answer. If it’s the second neuron, for instance, the network guesses that it saw a 2.
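For readers who think in code, the layer-by-layer “thinking” can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the names `forward`, `guess`, `toy_layers` and `toy_image` are made up for this sketch, the tiny network has 4 “pixels” rather than a real image, and the step that clips negative values to zero between layers is a common design choice that the description above does not mention. Which output position stands for which digit is simply a labeling convention.

```python
def forward(pixels, layers):
    """Propagate input values through a list of weight matrices.

    Each matrix is a list of rows; row j holds the weights feeding
    neuron j of the next layer.
    """
    values = list(pixels)
    for depth, weights in enumerate(layers):
        # Multiply neuron values by the weights to populate the next layer.
        values = [sum(w * v for w, v in zip(row, values)) for row in weights]
        # Assumption (not in the article): clip negatives to zero between layers.
        if depth < len(layers) - 1:
            values = [max(0.0, v) for v in values]
    return values


def guess(pixels, layers):
    """Return the position of the largest value in the final layer."""
    outputs = forward(pixels, layers)
    return max(range(len(outputs)), key=outputs.__getitem__)


# Hypothetical toy network: 4 "pixels" -> 3 hidden neurons -> 2 output neurons.
toy_layers = [
    [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]],
    [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
]
toy_image = [0.1, 0.2, 0.9, 0.8]

print(forward(toy_image, toy_layers))  # final-layer values
print(guess(toy_image, toy_layers))    # position of the winning neuron
```

Here the second output neuron (position 1, counting from zero) ends up with the larger value, so it is the network’s answer.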

To teach the network to make smarter guesses, a learning algorithm works backward. After each trial, it calculates the difference between the guess and the correct answer (which, in our example, would be represented by a high value for the fourth neuron in the final layer and low values elsewhere). Then the algorithm steps back through the network layer by layer, calculating how to tweak the weights in order to get the values of the final neurons to rise or fall as needed. This procedure, known as backpropagation, lies at the heart of deep learning.
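A single backward step can be written out for a network with one hidden layer. The sketch below is a minimal illustration, not the code any lab actually uses: the function `backprop_step`, the learning rate `rate`, the squared-error measure and the clip-to-zero rule between layers are all assumptions chosen to keep the example short, and the starting weights are arbitrary.

```python
def matvec(weights, values):
    """Multiply a weight matrix (list of rows) by a list of neuron values."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


def backprop_step(pixels, target, w1, w2, rate=0.1):
    """One guess-and-tweak step. Updates w1 and w2 in place.

    Returns the squared error of the guess made before the tweak.
    """
    # Forward pass, keeping intermediate values for the backward pass.
    hidden_in = matvec(w1, pixels)
    hidden = [max(0.0, v) for v in hidden_in]  # assumed clip-to-zero rule
    outputs = matvec(w2, hidden)

    # Difference between the guess and the correct answer.
    out_err = [o - t for o, t in zip(outputs, target)]

    # Step back one layer: how much each hidden neuron contributed to the
    # error (computed with the old w2, before it is tweaked).
    hidden_err = [
        sum(w2[k][j] * out_err[k] for k in range(len(w2)))
        if hidden_in[j] > 0 else 0.0
        for j in range(len(hidden))
    ]

    # Tweak each weight against the gradient of half the squared error.
    for k, row in enumerate(w2):
        for j in range(len(row)):
            row[j] -= rate * out_err[k] * hidden[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] -= rate * hidden_err[j] * pixels[i]

    return sum(e * e for e in out_err)


# Hypothetical toy setup: 2 "pixels", 2 hidden neurons, 2 output neurons.
w1 = [[0.5, -0.2], [0.3, 0.8]]
w2 = [[0.1, 0.4], [0.7, -0.3]]
pixels = [1.0, 0.5]
target = [0.0, 1.0]  # high value for the correct neuron, low elsewhere

before = backprop_step(pixels, target, w1, w2)
after = backprop_step(pixels, target, w1, w2)
print(before, after)  # the error shrinks after one tweak
```

The order matters: the hidden-layer error is computed from the old output weights, which is why it is calculated before any weight is changed.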

Through many guess-and-tweak repetitions, backpropagation guides the weights to a configuration of numbers that will, through a cascade of multiplications initiated by an image, spit out the digit written there.
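The guess-and-tweak loop itself is easy to sketch. To keep the example short, the version below makes a simplifying assumption: the network has a single layer of weights, so stepping “backward” involves only that one layer. The names `train`, `toy_examples`, `rate` and `rounds` are hypothetical, and the three four-pixel “images” are stand-ins for real handwritten digits.

```python
def outputs_for(pixels, weights):
    """Multiply the pixel values by the weights to get the final-layer values."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]


def guess(pixels, weights):
    """Return the position of the largest final-layer value."""
    values = outputs_for(pixels, weights)
    return max(range(len(values)), key=values.__getitem__)


def train(examples, n_classes, rate=0.1, rounds=200):
    """Repeat guess-and-tweak over all examples and return the weights."""
    n_pixels = len(examples[0][0])
    weights = [[0.0] * n_pixels for _ in range(n_classes)]
    for _ in range(rounds):
        for pixels, label in examples:
            values = outputs_for(pixels, weights)  # the guess
            for k, row in enumerate(weights):
                target = 1.0 if k == label else 0.0
                error = values[k] - target
                # The tweak: nudge each weight to shrink the error.
                for i in range(n_pixels):
                    row[i] -= rate * error * pixels[i]
    return weights


# Hypothetical training set: (pixel values, correct class) pairs.
toy_examples = [
    ([1.0, 0.0, 0.0, 1.0], 0),
    ([0.0, 1.0, 1.0, 0.0], 1),
    ([1.0, 1.0, 0.0, 0.0], 2),
]

trained = train(toy_examples, n_classes=3)
print([guess(pixels, trained) for pixels, _ in toy_examples])
```

With an untrained network (all weights zero) every guess is a tie; after a few hundred rounds the weights have settled into values that separate the three patterns.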

